Oracle Database On-Premises to Azure Data Lake Storage for Microsoft Fabric: Real-Time Data Ingestion, Migration, and Streaming using Oracle GoldenGate

Author: Ozwen Coelho | 6 min read | February 11, 2025

Nowadays, organization need to migrate and move data from Oracle database to various data lakes, such as Microsoft Fabric and Snowflake. This data lakes are used in Data Analytics and by Data Scientists which help organizations to process vast amounts of data in real time or batch, derive insights, and make data-driven decisions.

Oracle GoldenGate is very powerful tool to capture and replicate data, followed by converting it into various format, e.g. Json/parquet.Let’s dive into using Oracle GoldenGate for the extraction of data, configuring the replication process for distributed applications/analytics, and then converting data into Json/parquet format.

Environment Detail (Source): RHEL(8.10) || 19c (19.25) Oracle Database (GoldenGate binaries for Oracle (extract process) and GoldenGate binaries for distributed applications/analytics (Replicate process)

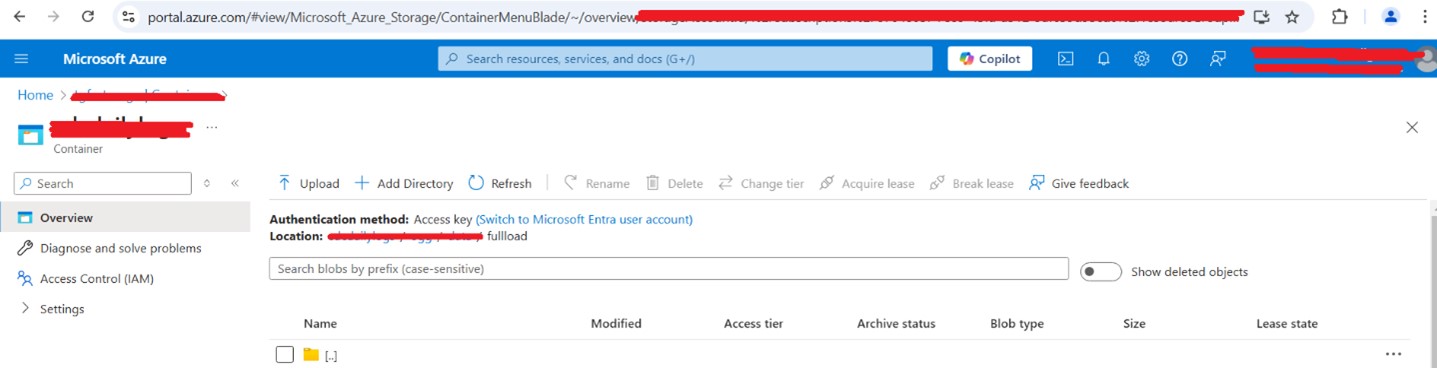

Environment Detail (Target): Azure Cloud || ADLS || Microsoft Azure BLOB storage

(Step 1) Download GoldenGate Binaries for Linux and for Distributed Applications and Analytics

Oracle GoldenGate Downloads

(Step 2) Install GoldenGate Binaries for Linux and for Distributed Applications and Analytics

/u01/app/oracle/product/GG/23_db || GG Binaries for Linux /u01/app/oracle/product/GG/23_az || GG Binaries for Distributed Applications and Analytics

(Step 3) Apply Patches to the GoldenGate Binaries to Be on the Latest Version

Applied patch#37071319 using command “opatch apply” on GG Binaries for Linux(/u01/app/oracle/product/GG/23_db) Applied patch#37108354 using command “opatch apply” on GG Binaries for Distributed Applications and Analytics (/u01/app/oracle/product/GG/23_az)

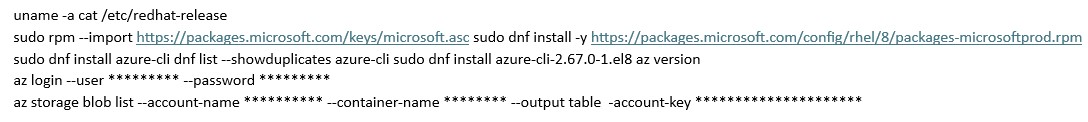

(Step 4) Configure Az Tool to Test Connectivity from On-premises to Azure Portal for Storage Account

(Step 5) Perform Prep Work on Oracle Database

Enable archivelog || alter database archivelog;

Enable Force logging || alter database force logging;

Enabled Supplemental Logging || alter database add supplemental log data;

Enable Golden Gate replication || alter system set enable_goldengate_replication=true;

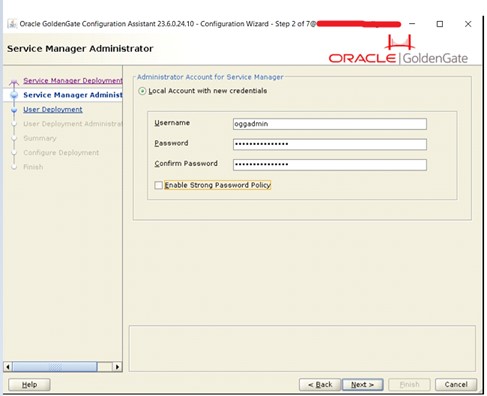

Create GG user || create user oggadmin identified by "*********"; Grant required permission || exec

dbms_goldengate_auth.grant_admin_privilege('oggadmin');

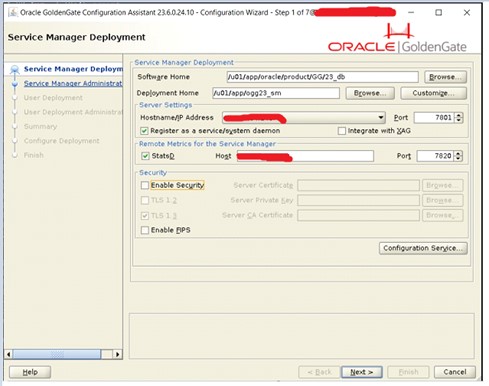

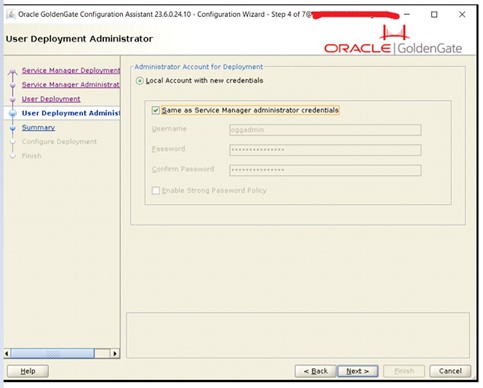

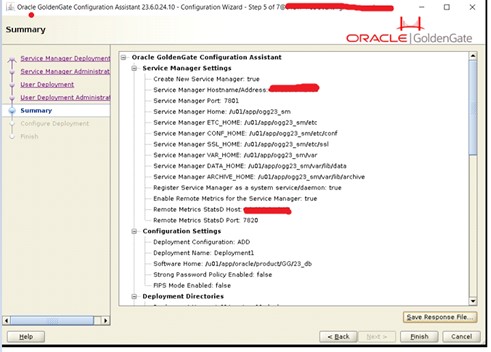

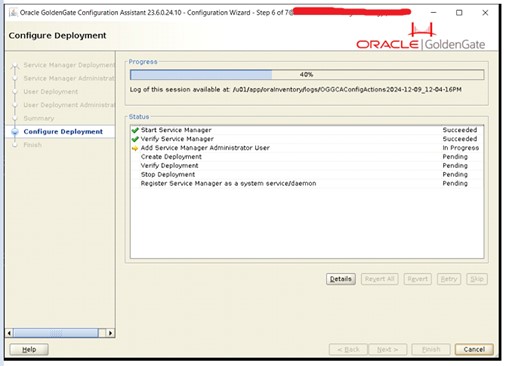

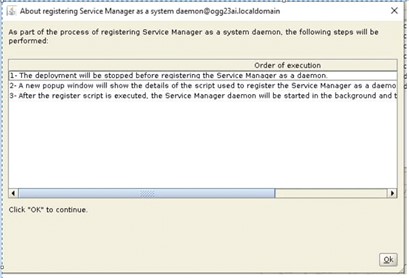

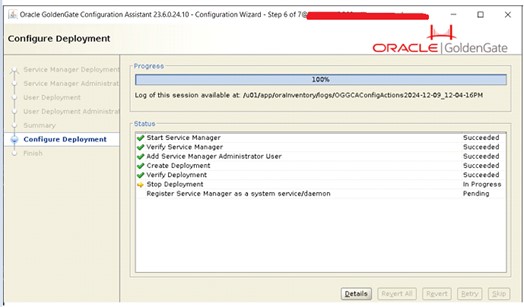

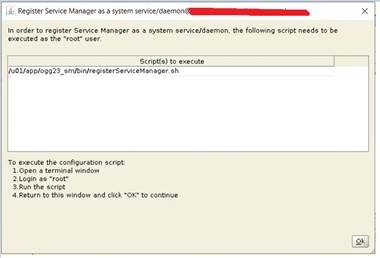

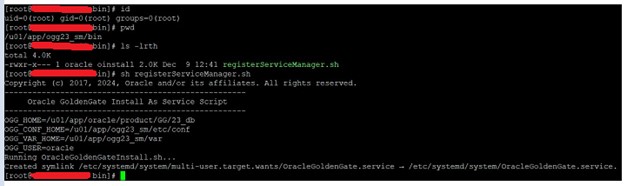

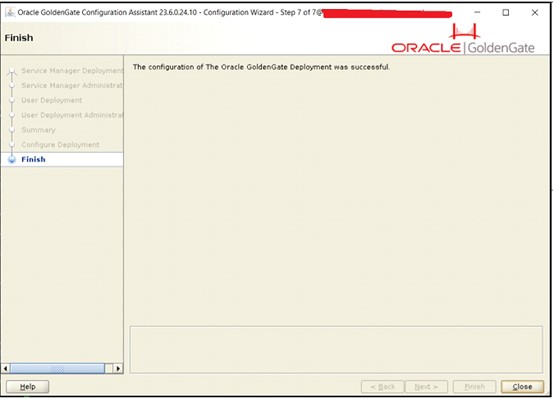

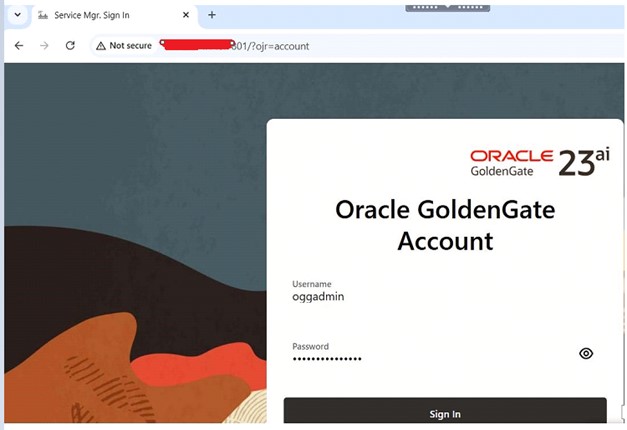

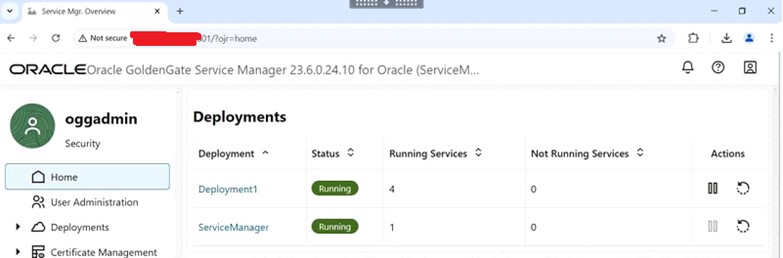

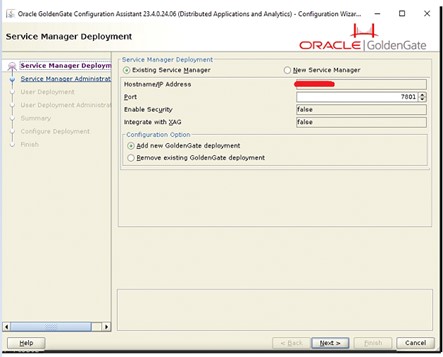

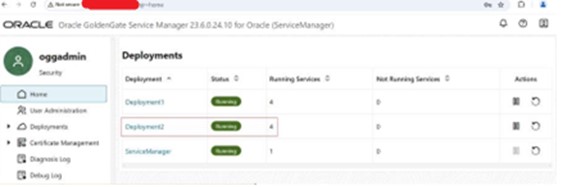

(Step 6) Configure Golden Gate 23c Service Manager and Create a Deployment /u01/app/oracle/product/GG/23_db/bin/oggca.sh

/u01/app/oracle/product/GG/23_db/bin/oggca.sh

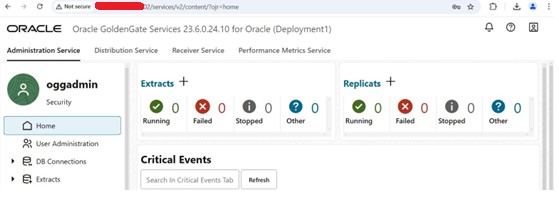

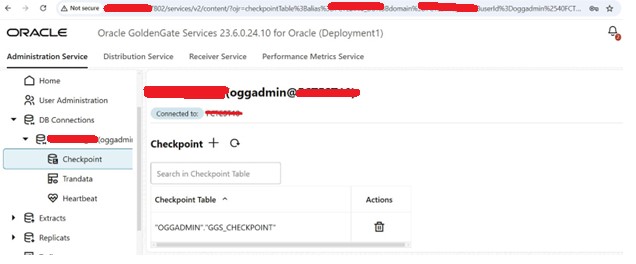

(Step 7) Prep Work for Extraction – Add a Database, Connection, Transdata, Checkpoint Table, Heartbeat Table, Extract Group Addition, and Registration

Add an Online Extract

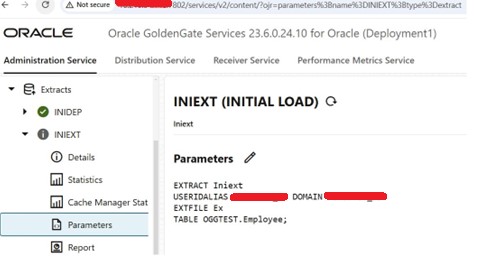

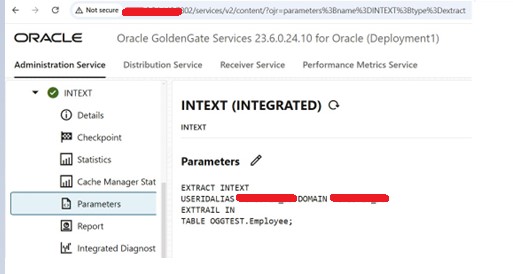

(Step 8) Configure the Extraction Process for Table (Initial Load + Continuous Replication) Initial load

Continuous replication

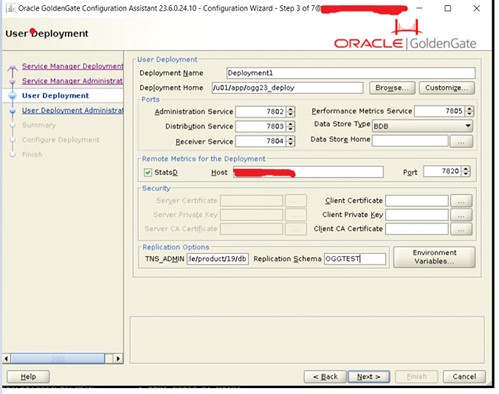

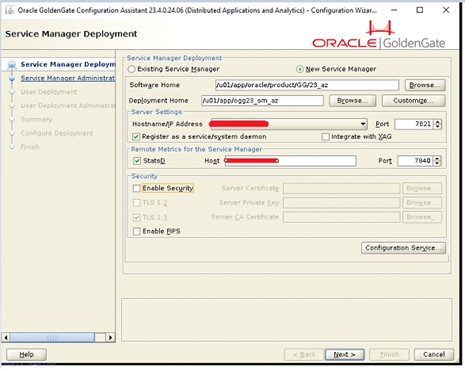

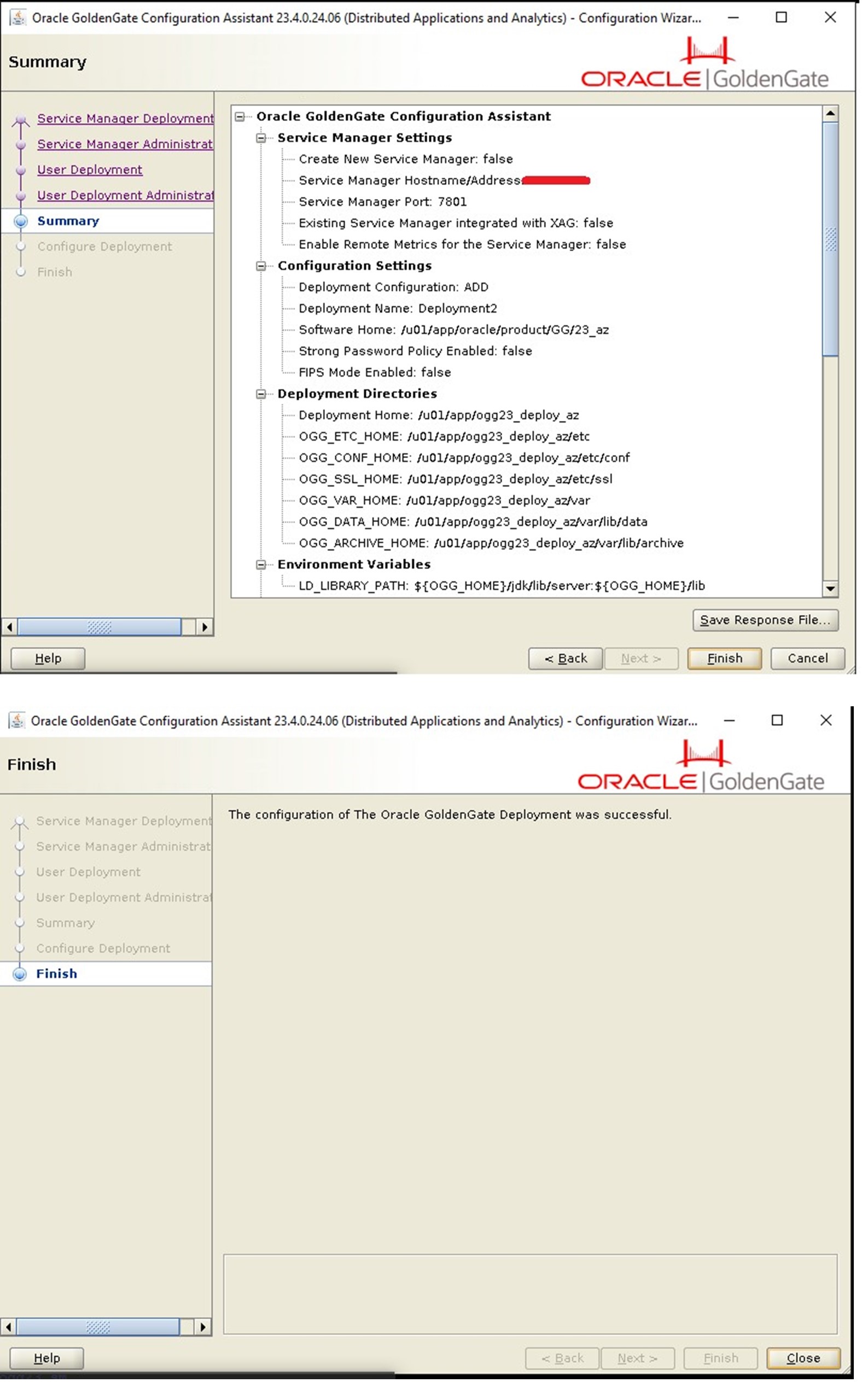

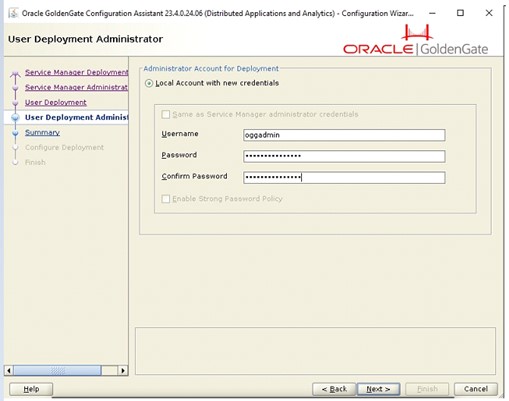

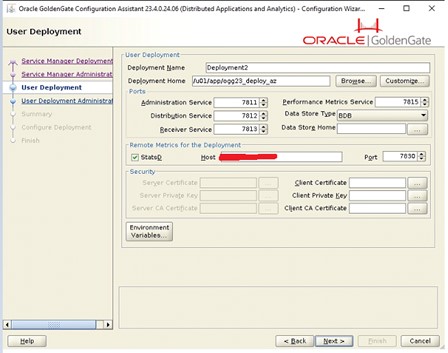

(Step 9) Configure Deployment for Distributed Applications and Analytics

/u01/app/oracle/product/GG/23_az/bin/oggca.sh

(Step 10) Install Dependencies (This is Required to Create Replicated in Parquet)

https://docs.oracle.com/en/middleware/goldengate/big-data/23/gadbd/qs-realtimeparquet-ingestion-azure-data-lake-storage-goldengate-daa.html#GUID-75E81FD0-0DF4418A-9643-8F63243DB74C

[oracle@******* DependencyDownloader]$ which java /usr/bin/java [oracle@******* DependencyDownloader]$ pwd /u01/app/oracle/product/GG/23_az/opt/DependencyDownloader [oracle@******* DependencyDownloader]$ ./parquet.sh 1.15.0 || https://search.maven.org/artifact/org.apache.parquet/parquethadoop ./hadoop.sh 3.4.1 || https://search.maven.org/artifact/org.apache.hadoop/hadoop-azure ./azure_blob_storage.sh 12.29.0 || https://search.maven.org/artifact/com.azure/azurestorage-blob

Copy the downloaded files from above to /u01/app/oracle/product/GG/oggjar/

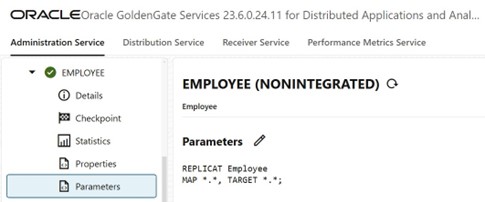

(Step 11) Configure Replicate Process to Push Data to Azure Blob Storage

Properties files:

#The File Writer Handler – no need to change gg.handlerlist=filewriter gg.handler.filewriter.type=filewriter gg.handler.filewriter.mode=op gg.handler.filewriter.pathMappingTemplate=./dirout gg.handler.filewriter.stateFileDirectory=./dirsta gg.handler.filewriter.fileRollInterval=7m gg.handler.filewriter.inactivityRollInterval=5s gg.handler.filewriter.fileWriteActiveSuffix=.tmp gg.handler.filewriter.finalizeAction=delete

### Avro OCF – no need to change

gg.handler.filewriter.format=avro_row_ocf

gg.handler.filewriter.fileNameMappingTemplate=${groupName}_${fullyQualifiedTableName}_${currentTimestamp}.avro

gg.handler.filewriter.format.pkUpdateHandling=delete-insert

gg.handler.filewriter.format.metaColumnsTemplate=${optype},${position}

gg.handler.filewriter.format.iso8601Format=false

gg.handler.filewriter.partitionByTable=true

gg.handler.filewriter.rollOnShutdown=true

#The Parquet Event Handler – no need to change

gg.handler.filewriter.eventHandler=parquet

gg.eventhandler.parquet.type=parquet

gg.eventhandler.parquet.pathMappingTemplate=./dirparquet

gg.eventhandler.parquet.fileNameMappingTemplate=${groupName}_${fullyQualifiedTableName}_${currentTimestamp}.parquet

gg.eventhandler.parquet.writeToHDFS=false

gg.eventhandler.parquet.finalizeAction=delete

#TODO Select ABS Event Handler – no need to change gg.eventhandler.parquet.eventHandler=abs

#TODO Set ABS Event Handler - please update as needed

gg.eventhandler.abs.type=abs

gg.eventhandler.abs.bucketMappingTemplate=******

gg.eventhandler.abs.pathMappingTemplate=ogg/data/fullload/${fullyQualifiedTableName}

gg.eventhandler.abs.accountName=*********

#TODO: Edit the Azure storage account key if access key is used

gg.eventhandler.abs.accountKey=************

#TODO: Edit the Azure shared access signature (SAS) to if SAS is used.

#gg.eventhandler.abs.sasToken=

#TODO: Edit the tenant ID, Client ID and Secret of the application if LDAP is used.

#gg.eventhandler.abs.tenantId=

#gg.eventhandler.abs.clientId=

#gg.eventhandler.abs.clientSecret=

#TODO Set the classpath to the paths you noted in step1 gg.classpath=/u01/app/oracle/product/GG/oggjar/* jvm.bootoptions=-Xmx512m -Xms32m

Want to connect with the author or explore other database resources? Visit Datavail.com for more.