SQL Server: In-Memory and Durable. How is That Possible?

Author: Pinal Dave | 4 min read | February 10, 2015

One of the major myths about SQL Server In-Memory OLTP is that since the data resides in memory, it would be lost once SQL Server is restarted. Well, as we mentioned – it is a myth. In reality, SQL Server guarantees changes made to durable tables are permanent and can survive a database or SQL Server restart.

To understand the durability of data in SQL Server, we need to recall the concept of transaction logging. Whenever there is a modification done for disk based table or durable in-memory table, it would generate one or more log records. These log records are hardened in the transaction log file (also known as T-Log or LDF file) before commit is confirmed by SQL Server to the client application. Notice that we’ve said “durable” in-memory table. This is because SQL Server In-Memory OLTP also supports non-durable in-memory tables. From this point onwards, if we say, “in-memory” it should be assumed as “durable in-memory table,” unless otherwise specified.

There are some differences in disk-based table logging and in-memory table logging. In case of disk based table committed and uncommitted changes are logged which is different for in-memory table. Whenever there is any change committed to in-memory table, it is logged to a transaction log file. The format of log records is different for in-memory.

In-Memory by code

Have a look at a demo to understand the logging performed by in-memory OLTP. First, create the database which contains in-memory table.

CREATE DATABASE InMemoryDB ON PRIMARY ( NAME = [InMemoryDB_data],FILENAME = 'C:IMDBInMemoryDB.mdf' ) ,FILEGROUP [InMemoryDB_FG] CONTAINS MEMORY_OPTIMIZED_DATA ( NAME = [InMemoryDB_dir],FILENAME = 'C:IMDBInMemoryDB_dir' ) LOG ON ( NAME = [InMemoryDB_log] ,Filename = 'C:IMDBInMemoryDB_log.ldf',SIZE = 102400KB )

The key to the above code is the place where we specify the InMemory OLTP filegroup with (CONTAINS MEMORY_OPTIMIZED_DATA). Now, we will create a table in this database.

Use InMemoryDB GO CREATE TABLE InMemoryTable ( ID INT NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 100000), FirstName CHAR(20) NOT NULL, LastName CHAR(20) NOT NULL ) WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA) GO

Let’s insert 100 rows in the above table in a transaction.

Use InMemoryDB

GO

set nocount on

go

BEGIN TRAN

DECLARE @i INT

SET @i = 1

WHILE @i <= 100

BEGIN

INSERT INTO InMemoryTable

VALUES (@i, 'FirstName'+CAST(@i as varchar(10)),'LastName'+CAST(@i as varchar(10)))

SET @i = @i + 1

END

COMMIT TRAN

Now, we can read transaction log to check the logging which is performed.

Use InMemoryDB GO SELECT top 3 * FROM sys.fn_dblog(NULL, NULL) ORDER BY [Current LSN] desc GO

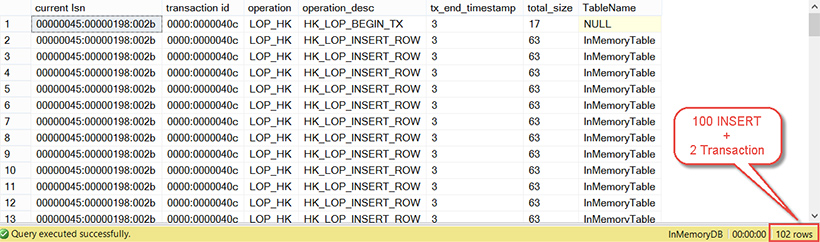

Here we can see operation “LOP_HK” in which HK stands for Hekaton operation. Hekaton was the code name of this project when Microsoft was developing it. If we pick the LSN which is highlighted and use that to break apart the 6380 byte record using fn_dblog_xtp undocumented command.

Use InMemoryDB GO SELECT [current lsn], [transaction id], operation, operation_desc, tx_end_timestamp, total_size, object_name(table_id) AS TableName FROM sys.fn_dblog_xtp(null, null) WHERE [Current LSN] = '00000045:00000198:002b'

We can see 100 inserts, which we performed in loop.

By looking at the above demo, we can point out few salient points about logging of In-Memory OLTP in SQL Server:

- Multiple changes in the table are merged into single log record. We demonstrated this above by showing that 1 record = 100 mini records.

- Log records are not generated for any modification of Index. The index on the table is rebuilt during startup of the database. In the demo, we can see that we don’t have any index modification written in the transaction log. We only see “HK_LOP_INSERT_ROW”.

- Transaction log records are written only if the transaction on in-memory table is committed. This means that no UNDO information would be logged. This can be done by trying the same demo and using rollback instead of commit.

Due to all of the above reasons, logging is more efficient for in-memory tables as compared to disk based tables and since it is logged in transaction log, it is durable and persisted.

As a closing note, I should point out that in-memory tables can be made non-durable as well. This is done by defining durability = “SCHEMA_ONLY” while creating the table. This option is useful in situation where we want lightning fast inserts for staging tables used in loading phase of Data warehouses, ASP Session state database, or Temporary logging tables. In all these cases, data can be reconstructed and not very important to store permanently. In this blog, we saw how InMemory OLTP is durable when working with SQL Server 2014.